Introduction and Background - 2020 Workshop Report

Advances in biology, computer science and other related fields are paving the way for major improvements in how we evaluate the environmental and public health risks posed by potentially toxic chemicals. Combined advances in discovery and clinical sciences, data science and technology have brought toxicity testing to a pivotal point of transformation, forming part of what is known as the Fourth Industrial Revolution (4IR). Among the most significant recent scientific advances is the development of alternative toxicity testing and computer modelling strategies for the evaluation of hazard and exposure.

The volume of data produced in the world is growing ever more rapidly, from 33 zettabytes in 2018 to an expected 175 zettabytes in 2025 (IDC, 2018). The Department for Business, Energy and Industrial Strategy (BEIS) white paper ‘Regulation for the Fourth Industrial Revolution’ notes that changes in technology are occurring at a "scale, speed and complexity that is unprecedented". These new and changing technologies can improve regulatory processes in several ways, for example by increasing the efficiency of data collection and by exploiting data already held by agencies to support better analysis and risk assessment.

Chemical Landscape

Over 350,000 chemicals and mixtures of chemicals have been registered for production and use worldwide. This is up to three times as many as previously estimated, with substantial differences between countries and regions. Notably, the identities of many of these chemicals remain publicly unknown because they are claimed as confidential (over 50,000) or are ambiguously described (up to 70,000) (Wang et al., 2020).

Consequently, thousands of chemicals are in common use, but only a fraction of them have undergone significant toxicological evaluation. As more chemicals emerge, it is important to prioritize the remainder for targeted testing (Judson et al., 2009). This is especially important for chemicals found in food and in the environment, for which little or no toxicological information is sometimes available.

Potency Estimation

Potency measures can be applied to chemicals for the rapid identification of pharmacologically active hits or for toxicological assessment. They can also be used as input data for predictive modelling or association mapping.
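One common potency measure is the concentration that produces a half-maximal response, often reported as an AC50 or EC50. The following minimal sketch estimates this value from concentration-response data. It assumes a Hill-type concentration-response curve with a zero baseline. Rather than performing a full curve fit, it uses linear interpolation on a log10 concentration scale. The function names `hill` and `interpolate_ac50` are hypothetical and are not taken from any tool discussed at the workshop.

```python
import math


def hill(conc, top, ac50, n, bottom=0.0):
    """Hill model: response at concentration `conc` (same units as `ac50`)."""
    return bottom + (top - bottom) / (1.0 + (ac50 / conc) ** n)


def interpolate_ac50(concs, responses):
    """Estimate the concentration giving half of the maximal observed response.

    Assumes a zero baseline and a monotonically increasing response, and
    interpolates linearly between tested concentrations on a log10 scale.
    Returns None if the half-maximal response is not crossed in the tested range.
    """
    half = max(responses) / 2.0
    points = sorted(zip(concs, responses))
    for (c_lo, r_lo), (c_hi, r_hi) in zip(points, points[1:]):
        if r_lo <= half <= r_hi and r_hi > r_lo:
            frac = (half - r_lo) / (r_hi - r_lo)
            log_c = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_c
    return None


# Hypothetical data simulated from a Hill curve with a known AC50 of 10.
concs = [0.1, 1, 10, 100, 1000]
responses = [hill(c, top=100.0, ac50=10.0, n=1.0) for c in concs]
estimate = interpolate_ac50(concs, responses)
print(f"Estimated AC50: {estimate:.2f}")
```

In this simulated case the estimate falls slightly below the true value of 10. This happens because the highest tested concentration does not quite reach the curve's plateau, which illustrates why fitting the full curve is usually preferred in practice.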

Overview of in silico toxicology

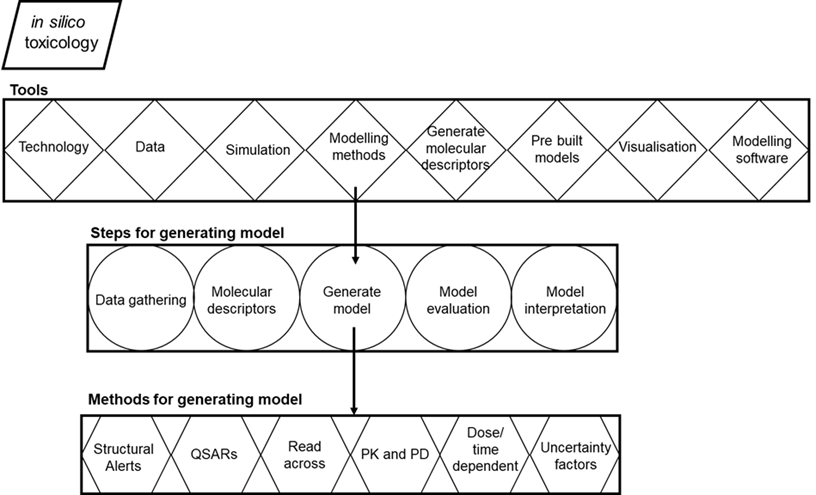

In silico toxicology encompasses a wide variety of computational tools (Figure 1):

- databases storing data on chemicals, their toxicity and their chemical properties;
- software for generating molecular descriptors;
- simulation tools for systems biology and molecular dynamics;
- modelling methods for toxicity prediction;
- modelling tools, such as statistical packages and software for generating prediction models;
- expert systems that provide pre-built toxicity prediction models as web servers or standalone applications;
- visualization tools.

In general, developing a prediction model involves the following steps (Figure 1): gathering biological data that link chemicals to toxicity endpoints, calculating molecular descriptors for the chemicals, generating a prediction model, evaluating the accuracy of the model, and validating the model (Patterson et al., 2020).

Figure 1. Overview of in silico toxicology: tools, steps for generating a model, and methods for generating a model (figure adapted from Raies and Bajic, 2016).
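The general model-building steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a recommended QSAR workflow. The "biological data" are synthetic, and the two molecular descriptors per chemical are assumed to be pre-computed. A simple k-nearest-neighbour classifier stands in for the many modelling methods available. Internal evaluation uses 5-fold cross-validation, and a held-out subset plays the role of external validation. All function names are hypothetical.

```python
import math
import random


def gather_data(n, seed=0):
    """Steps 1-2 (hypothetical): synthetic chemicals with two pre-computed
    descriptors each; label 1 ("toxic") when the descriptor sum exceeds 1.0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        descriptors = (rng.random(), rng.random())
        label = 1 if sum(descriptors) > 1.0 else 0
        data.append((descriptors, label))
    return data


def knn_predict(train, query, k=3):
    """Step 3: k-nearest-neighbour model; majority vote of the k closest chemicals."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0


def accuracy(train, test, k=3):
    """Fraction of chemicals in `test` whose label the model predicts correctly."""
    correct = sum(knn_predict(train, x, k) == y for x, y in test)
    return correct / len(test)


def cross_validate(data, folds=5, k=3):
    """Step 4: internal evaluation by k-fold cross-validation."""
    size = len(data) // folds
    scores = []
    for i in range(folds):
        test = data[i * size:(i + 1) * size]
        train = data[:i * size] + data[(i + 1) * size:]
        scores.append(accuracy(train, test, k))
    return sum(scores) / folds


data = gather_data(200)
training, external = data[:150], data[150:]  # hold out chemicals for validation
cv_score = cross_validate(training)
external_score = accuracy(training, external)  # Step 5: external validation
print(f"cross-validated accuracy: {cv_score:.2f}, external accuracy: {external_score:.2f}")
```

Holding out the external set before any model building mirrors the separation between evaluating a model and validating it. Real workflows would also consider the model's applicability domain.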

Integrated Approaches to Testing and Assessment

Integrated approaches to testing and assessment (IATAs) provide a means by which all relevant and reliable existing information about a chemical can be used to answer a defined hazard characterization question. The information considered can include toxicity data, exposure routes, use cases and production volumes. This information is used to characterize outcomes that can inform regulatory decision-making.

The drawbacks of traditional toxicity testing approaches using laboratory animals may be overcome by using human cell-based, biochemical and/or computational methods to predict chemical toxicity. Because toxicity mechanisms are complex, data from several methods usually need to be considered in combination to adequately predict toxic effects. IATAs provide a means by which these data can be combined. When necessary, IATAs can guide the generation of new data, preferably using non-animal approaches, to inform regulatory decision-making.
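To illustrate how results from several methods might be combined, the following minimal sketch applies a simple agreement rule. It assumes each method returns a positive, negative or inconclusive call. A conclusion is reached only when enough conclusive calls agree and outnumber the opposite call; otherwise the rule recommends generating new data. The rule, the threshold of two agreeing methods and the method names are hypothetical. They are not drawn from any specific IATA or regulatory guideline.

```python
from collections import Counter
from enum import Enum


class Call(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"
    INCONCLUSIVE = "inconclusive"


def integrate(calls, required_agreement=2):
    """Combine per-method calls using a hypothetical agreement rule.

    calls: mapping of method name -> Call.
    Returns "positive" or "negative" when at least `required_agreement`
    conclusive calls agree and outnumber the opposite call; otherwise returns
    "generate new data", mirroring how an IATA can guide further testing.
    """
    counts = Counter(call for call in calls.values() if call is not Call.INCONCLUSIVE)
    pos, neg = counts[Call.POSITIVE], counts[Call.NEGATIVE]
    if pos >= required_agreement and pos > neg:
        return "positive"
    if neg >= required_agreement and neg > pos:
        return "negative"
    return "generate new data"


# Hypothetical evidence from three non-animal methods.
evidence = {
    "in_silico_model": Call.POSITIVE,
    "human_cell_assay": Call.POSITIVE,
    "biochemical_assay": Call.NEGATIVE,
}
print(integrate(evidence))
```

Real IATAs typically weigh evidence by reliability and relevance rather than counting votes. A count-based rule is used here only because it keeps the combination step easy to follow.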

Previously

In 2009, the COT held a workshop on 21st century toxicology. The workshop considered the United States (US) National Academies report ‘Toxicity Testing in the 21st Century: A Vision and a Strategy’. The report called for the accelerated development and adoption of human cell-based in vitro and in silico methods for the prediction of hazards, the determination of mechanistic information and the integration of data.

Present - Why now?

As we are now halfway through the strategy period (10 years), it is pertinent to hold a workshop to review the methodologies currently available and to discuss their applicability in risk assessment, including within the current regulatory landscape.

FSA requirement for potency estimation / exploring dose response / PBPK

The UK FSA have identified a need for potency estimation to aid in risk assessment.

This will be fundamental in risk assessment scenarios where little or no information is available on the toxicity of a chemical.

When responding to food incidents, the UK FSA regularly assess chemicals, particularly unauthorised novel food ingredients and sports or dietary supplements, for which very little toxicological information is available. In such cases it is not possible to provide meaningful, valid risk advice to FSA Policy colleagues.

Background work

Background work was undertaken to help set the scene for the workshop and its outputs. A scoping paper, ‘Environmental, health and safety alternative testing strategies: Development of methods for potency estimation’, was presented to the COT in December 2019. To inform the objectives of the workshop, the COT were provided with a concise review of currently available methods. These included databases, different kinds of quantitative structure-activity relationship (QSAR) methods, adverse outcome pathways (AOPs), high throughput screening (HTS), read-across models, molecular modelling approaches, machine learning, data mining, network analysis tools, and data analysis tools using artificial intelligence (AI).
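Of the methods listed above, read-across is one of the simplest to illustrate. The following minimal sketch makes several assumptions. Chemical structures are represented as fingerprints, stored here as sets of hypothetical feature IDs, and compared using Tanimoto similarity. The potency of a target chemical is then estimated as the similarity-weighted mean of sufficiently similar analogues. The similarity threshold, the log10 potency scale and all names and values are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two fingerprints stored as sets of feature IDs."""
    union = fp_a | fp_b
    return len(fp_a & fp_b) / len(union) if union else 0.0


def read_across(target_fp, analogues, threshold=0.5):
    """Predict a target's potency as the similarity-weighted mean of analogues
    whose similarity to the target is at least `threshold`.

    analogues: list of (name, fingerprint set, potency) tuples, with potency
    assumed to be on a log10 scale (e.g. log10 of an effect concentration).
    Returns None when no analogue is similar enough for read-across.
    """
    similar = [(tanimoto(target_fp, fp), potency) for _, fp, potency in analogues]
    similar = [(sim, potency) for sim, potency in similar if sim >= threshold]
    if not similar:
        return None
    total = sum(sim for sim, _ in similar)
    return sum(sim * potency for sim, potency in similar) / total


# Hypothetical fingerprints and potencies, for illustration only.
target = {1, 2, 3, 4}
analogues = [
    ("analogue_A", {1, 2, 3, 4, 5}, 1.0),  # similarity 4/5 = 0.8
    ("analogue_B", {1, 2, 3, 6}, 2.0),     # similarity 3/5 = 0.6
    ("analogue_C", {7, 8, 9}, 4.0),        # similarity 0.0, excluded
]
print(read_across(target, analogues))
```

Returning None when no analogue passes the threshold reflects the fact that read-across is only justifiable when suitable source chemicals exist.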