UK FSA COT Paving the way for a UK Roadmap-Development, Validation and Acceptance of New Approach Methodologies Workshop summary (2021)

Cover Page

Workshop Report

2021

Background and Objectives

1. The future of food safety assessment of chemicals depends on our adaptability and flexibility whilst using the best scientific methodologies and strategies available in order to respond to the accelerating developments in science and technology. The vision is to be able to predict risk more rapidly and efficiently. In addition, it is about using the best science available and integrating innovative technologies into the chemical risk assessment process. This will be fundamental in the future for human and environmental safety.

2. Some of the current challenges faced by UK chemical risk assessors are: the large number of (groups of) chemicals that require assessment, the lack of toxicological data on these chemicals, and the speed, cost, and ethical and moral considerations of traditional testing methods. One of the major recent scientific advancements is the development of New Approach Methodologies (NAMs), including but not limited to high throughput screening, omics and in silico computer modelling strategies (e.g., Artificial Intelligence (AI) and machine learning) for the evaluation of hazard and exposure. The use of NAMs also supports the Replacement, Reduction and Refinement (3Rs) approach to animal testing.

3. NAMs are gaining traction as a systematic method to support the informed conclusions of chemical risk assessments.

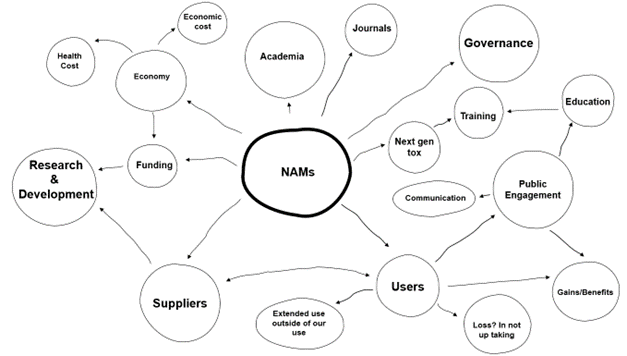

4. Incorporating and implementing these new predictive capabilities brings both challenges and opportunities for regulatory agencies. Moving from research to risk assessment to the regulatory setting and beyond, there must be appropriate validation and acceptance of these new and emerging technologies.

5. In order to achieve this, the UK Food Standards Agency (FSA) and Committee on Toxicity of Chemicals in Food, Consumer Products and the Environment (COT) are developing a UK roadmap towards acceptance and integration of these NAMs, including predictive toxicology methods using computer modelling, into safety and risk assessments for regulatory decision making.

6. A workshop was held online in October 2021 with the intention of gaining insights from a variety of perspectives to develop a UK Roadmap for NAMs in chemical risk assessment.

7. The background and objectives of the workshop were introduced to the participants.

8. Implementation of NAMs will not only require the historic 3Rs approach (i.e., replacement, reduction, and refinement of animal experiments) but also the expansion to the 6R principle: (to also include) reproducibility, relevance, and regulatory acceptance.

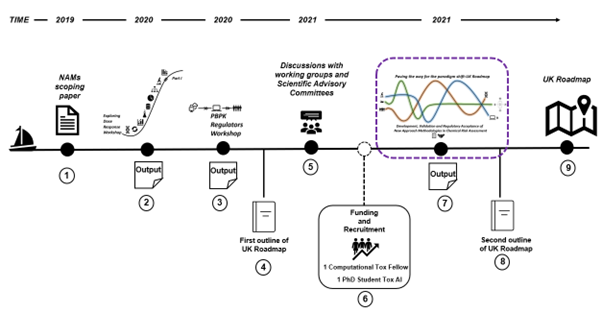

9. In support of this, the FSA and COT have produced a scoping paper on the available NAMs methodologies and strategies and organised three workshops (held in international, multidisciplinary settings and which included participation of attendees from regulatory agencies, government bodies, academia and industry). Furthermore, the FSA have funded a computational toxicology fellow at the University of Birmingham and a PhD student (LIDo-TOX AI) at King's College London (Figure 1).

10. The aim of the present workshop was to receive insights, comments and ideas on the roadmap from a wide variety of stakeholders in industry, academia and government. This will allow it to be developed into a useful and engaging document that is beneficial to more than just the FSA and COT. This process would include a range of scientists, policy makers, and lawyers, and will involve working in the international space and engaging with the public. Furthermore, the workshop should address what is holding back the progress of NAMs being used in the regulatory space, across a range of areas such as socio-technical barriers and regulatory frameworks.

11. In order for us to create a roadmap that is inclusive and visionary we have taken into account a wide variety of opinions and ideas from different fields to ensure we capture all the views, opportunities and challenges that we may face in the integration of NAMs in chemical risk assessment.

12. Professor Alan Boobis (Imperial College London and Chair of the COT) further emphasised that this workshop is an opportunity to address what the Roadmap needs to include in order to help achieve the stated objectives, and how we get to where we want to be, i.e., how do we gain acceptance of NAMs from risk assessors and regulators? Furthermore, how do we ensure that NAMs are fit for purpose?

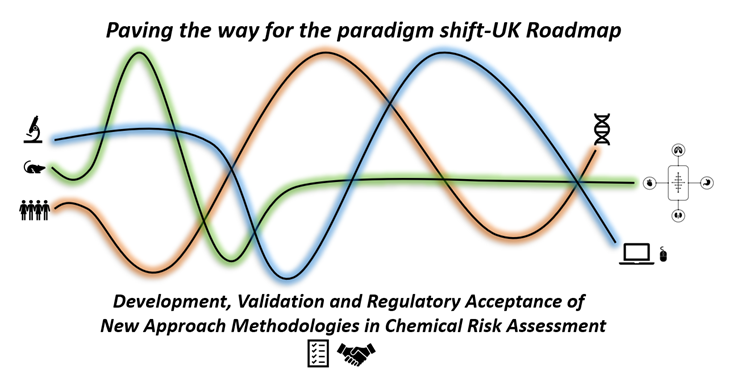

Figure 1. The roadmap journey. 1) Scoping paper: “Environmental, health and safety alternative testing strategies: Development of methods for potency estimation” (TOX/2019/70) was reviewed by the COT in December 2019. 2) Output of the Exploring Dose Response Workshop (March 2020). 3) Output of the PBPK Regulators Workshop (December 2020). 4) First outline of UK Roadmap. 5) Output of the discussions with working groups and scientific advisory committees (regular reviews). 6) Funded a computational toxicology fellow (University of Birmingham) and a PhD student in artificial intelligence (King's College London). 7) Paving the way for the paradigm shift - UK Roadmap Development, Validation and Regulatory Acceptance of New Approach Methodologies in Chemical Risk Assessment workshop (current workshop). 8) Second outline of the UK Roadmap. 9) Finalisation of the UK Roadmap.

Overview

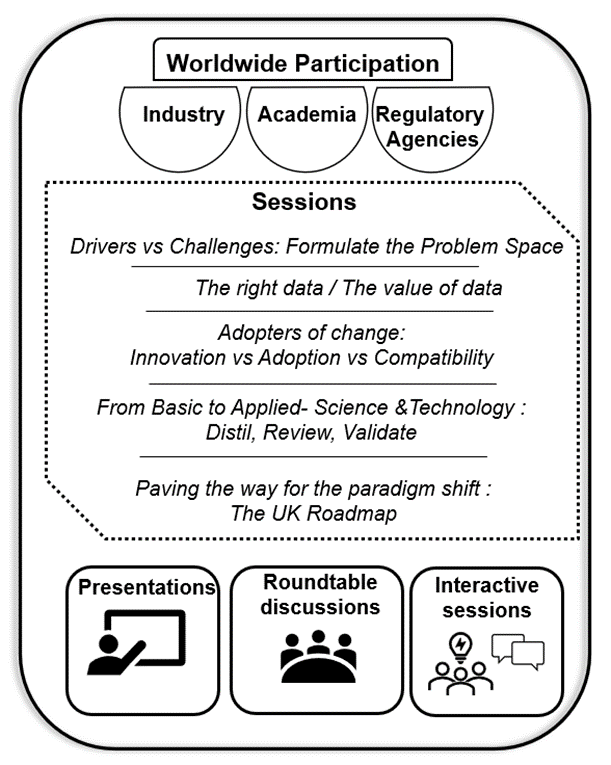

13. The workshop took place online on the 6th and 7th of October 2021 (Figure 2). It had worldwide participation with attendees from industry, academia, and regulatory agencies. The days were divided into different area sessions: Drivers vs Challenges: Formulate the Problem Space; The right data/the value of data; Adopters of change: Innovation vs Adoption vs Compatibility; From basic to Applied-Science & Technology: Distil, Review, Validate; Paving the way for the paradigm shift: The UK Roadmap. Each of the sessions had presentations followed by a roundtable discussion and included interactive sessions (Figure 3).

Figure 2. Workshop Logo.

Figure 3. Overview of workshop.

Day 1

Sessions Summary

14. Professor John Colbourne started the session with “The NAMs Space: Where are we at?” and presented on why this is the right time for NAMs and to look carefully at some opportunities in this space.

15. Following this presentation, the discussion covered the European Union (EU) Chemical Strategy (2020), highlighting its priorities and maximising the use of NAMs, as well as consideration of the Registration, Evaluation, Authorisation and Restriction of Chemicals (REACH) regulation by 2022.

16. It was mentioned that the US Environmental Protection Agency (EPA) has made a commitment to ban animal testing by 2035 (EPA, 2019) and is awarding $4.25 million to advance the research and development of alternative test methods for evaluating the safety of chemicals that will minimize, and hopefully eliminate, the need for animal testing.

17. EFSA also supports the 3Rs principles (EFSA Alternatives to animal testing), as does the European Medicines Agency (EMA), which recently implemented new measures to minimise animal testing during medicines development (EMA, 2019).

18. Since 2016, there has been an international regulatory government initiative, Accelerating the Pace of Chemical Risk Assessment (APCRA), whose aim is to promote collaboration and dialogue on the scientific and regulatory needs for the application and acceptance of NAMs in regulatory decision making. Case studies were initiated following the first APCRA workshop, and new case study proposals are introduced on an ongoing basis.

19. Other initiatives include:

- Partnership for the Assessment of Risks from Chemicals (PARC), which aims to develop next-generation chemical risk assessment in order to protect health and the environment.

- Animal-free Safety assessment of chemicals: Project cluster for Implementation of novel Strategies (ASPIS) which is a joint collaboration of the H2020 funded projects ONTOX, PrecisionTOX, RISK-HUNT3R and represents Europe’s €60 million effort towards the sustainable, animal-free and reliable chemical risk assessment of tomorrow.

20. It was discussed that it would be necessary to read between the lines and gain a clear sense of the time needed to look carefully at how the UK can progress in this space, especially since leaving the EU. The UK can learn from international agencies. The “UK Innovation Strategy: leading the future by creating it” was used to emphasise how this field champions that aim.

21. Finally, it was agreed that this workshop is also testimony that change is happening.

Session I

Drivers vs Challenges: Formulate the Problem Space

Professor Alan Boobis (Imperial College London) presented on “A framework for new approach methodologies for human health safety assessment”.

22. It was stated that there has been an increasing demand for non-animal testing methods and that 20 years ago the new in vitro approaches started to be developed, with a clear vision of the future. NAMs consider biological key events and their mechanistic underpinning. These initial methods were followed by in silico / in vitro approaches that study intermediate biochemical key events (trace the toxicological potential of chemicals) to support the accumulated knowledge of in vivo effects.

23. The challenge is to keep up with technology and innovation. A plethora of new methodologies has been published; however, there are still no specific criteria for, or established measures of the reliability of, these new methods. The uncertainty around these is still less than if used in a complex risk assessment. Specific criteria are needed for establishing/verifying fitness for purpose and even method performance.

24. NAMs must be assessed with specific goals, and guidelines for decision-making need to be provided/developed following 3 major steps:

(1) Problem formulation: context of use.

(2) Core criteria to be met: accuracy transparency.

(3) Specific criteria for methodologies: (chemical domain, base mechanism).

25. The general consensus is to gain confidence with predicting in a reliable manner with accurate transparency. There were discussions around the Parish et al (2020) paper and how to integrate NAMs into a framework like the Integrated Approaches to Testing and Assessment (IATAs).

Professor Mark Cronin (Liverpool John Moores University) presented on the “Development of read-across approaches that are acceptable for regulatory purposes.”

26. Read-across is the process of data gap filling for a data poor (or the target) substance(s) with information from similar data rich (the source) substance(s). It is often termed an analogue approach when a one-to-one read-across is performed, or a category approach when the data from many source substances are read across to the target. A variety of frameworks to perform read-across have been reported, with a harmonised framework presented by Patlewicz et al (2017). The harmonised framework intends to make read-across suitable for regulatory purposes and is based around seven steps that are common to all frameworks.

27. The seven steps in the harmonised framework can be further simplified into the need for:

- Problem formulation. This should define the purpose of the read-across (relating to regulatory requirements) and acceptable levels of uncertainty for the intended purpose. Preliminary knowledge to guide the read-across, e.g., the similarity hypothesis, should be identified, acknowledging that read-across will be specific to the substance and endpoint. There are many sources of guidance e.g., ECHA, OECD, and ECETOC etc to assist the user.

- Use of an appropriate similarity hypothesis. A justifiable similarity hypothesis is vital to a strong read-across argument. Frequently used similarity approaches include the use of structural, mode or mechanism of action-based analogues, common degradants or metabolites, measures of chemical similarity based on e.g., Tanimoto indices derived from molecular fingerprints, or biological similarity.

- Identification of suitable analogues and data. Computational tools such as the OECD Quantitative Structure Activity Relationship (QSAR) Toolbox, AMBIT, ToxRead, GenRA and ChemTunes.ToxGPS will assist in identifying analogues, particularly those with potentially high-quality data.

- Assessment of the read-across including uncertainties. The read-across needs to be evaluated to ensure its robustness and justification. The European Chemicals Agency (ECHA) Read-Across Assessment Framework (ECHA RAAF) provides expert guidance in the assessment of a read-across for regulatory use. Uncertainties in read-across can be evaluated (e.g. Schultz et al., 2019) and have been shown to be reduced by inclusion of lines of evidence drawn from NAM data (e.g. Pestana et al., 2021).

- Appropriate documentation. For regulatory use, the read-across must be fully justified and described, requiring clear documentation. The documentation must be fit-for-purpose and is often based around suitable reporting templates which include a description of the molecules (target / source), their properties and associated NAM data and other relevant information. The documentation should include a narrative justification of the read-across including an assessment of the similarity hypothesis and an evaluation of the data and relevant uncertainties. An example of how to perform and report read-across, suitable for regulatory purposes, is provided by ECHA.
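
The chemical similarity measure mentioned in the steps above (a Tanimoto index derived from molecular fingerprints) can be illustrated with a minimal sketch. It assumes each fingerprint is represented simply as the set of its "on" bit positions. The bit sets, substance names and the 0.5 screening threshold are hypothetical values chosen for illustration; they do not come from the workshop or from any of the tools listed. In practice the fingerprints would be generated by a cheminformatics toolkit, and a similarity score alone would not justify a read-across.

```python
def tanimoto(bits_a: set, bits_b: set) -> float:
    """Tanimoto index: shared on-bits divided by all on-bits in either fingerprint."""
    if not bits_a and not bits_b:
        return 0.0  # convention chosen here for two empty fingerprints
    return len(bits_a & bits_b) / len(bits_a | bits_b)


def rank_analogues(target_bits: set, sources: dict, threshold: float = 0.5) -> list:
    """Return (name, score) pairs at or above the threshold, most similar first."""
    scored = [(name, tanimoto(target_bits, bits)) for name, bits in sources.items()]
    kept = [(name, score) for name, score in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Hypothetical fingerprints (on-bit positions) for a data-poor target substance
# and three data-rich candidate source substances.
target = {1, 4, 7, 9, 12}
sources = {
    "source_A": {1, 4, 7, 9, 15},
    "source_B": {2, 4, 20},
    "source_C": {1, 4, 7, 9, 12, 30},
}

ranked = rank_analogues(target, sources)
for name, score in ranked:
    print(f"{name}: {score:.2f}")
```

A ranking like this only supports the analogue identification step; the similarity hypothesis, the data quality of the sources and the remaining uncertainties still need to be assessed and documented as described above.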

Session I Roundtable discussion

28. Participants had heard about the drivers/aspirations to replace animal testing, and were asked what is the objective in the UK, and whether there was/should be a target date? How do we meet that date in terms of method development? Participants did not know if it will be a hard deadline.

29. The science has matured rapidly over the last 10-15 years. The science around NAMs is driven by the need to understand links between exposures and hazards. Fitting this in with regulation will require acceptance and compromise. There needs to be better dissemination of the science but also some enticement from regulators and industry to allow that to happen. Barriers need to be identified and addressed. There needs to be a better definition of NAMs, and there should be acknowledgement that the expectation can change. Do NAMs need to be predictive, or can they be indicative?

30. It was questioned whether better problem formulation was needed. We are faced with a range of problems. The question could be which of a number of congeners is the least toxic. In the absence of data for a chemical can we at least understand whether it is likely to be a major concern or of lower concern?

31. The ultimate aim is to protect the consumer from chemical hazards. If new data are available, they should be incorporated to improve the understanding of the biology. Up to this point, there has been a reliance on animal tests but there is a driver to change. Is it possible to get a better description of human biology than, for example, a rat model?

32. Participants went on to discuss how risk assessors and policy makers can be convinced that a new method is fit for purpose and provides value. Method development is funded; however, method verification and validation are not. The next step needs investment. The National Centre for 3Rs (NC3Rs) does some good work trying to bridge the gap. The German government has funded method verification in a couple of cases.

33. In order to verify a method, it needs to be applied to large numbers of chemicals and establish what is missing. A deadline needs to be set, then milestones set over the next ten years to determine defined goals. What needs to be done should be determined, costed, and the investment made.

34. Case studies were highlighted as being important. The most convincing case studies are prospective, but these are difficult to do.

35. The OECD has considerable interest in NAMs: it approved a skin sensitisation method this year which includes a NAM; there is the adverse outcome pathway (AOP) framework; and there is also a framework for recording omics data.

36. It was agreed that major funding was required and that regulators need to get together with scientists and innovators to discuss what tools they need.

37. It was pointed out that when there is the need to move on from in silico and in vitro methods to animal testing, more animal testing tends to be required. For example, based on genotoxicity testing results, regulators may then ask for another assay, or another tissue to be studied.

38. Public engagement is needed. The public tend to be against animal testing but also expect very high standards of consumer safety and thorough testing. The issue of uncertainty was raised, and how expectations are set. Case studies may show if the uncertainty is as amenable to rigour as we think it is.

39. If there are case studies, what is going to be the measure of success? There are not currently good benchmarks for many chemicals. Generally, we are looking for the results to not be greatly different from animal models; we want the models to be predictive of humans, but we do not have the data to say when we have been successful.

40. The question of trade-off was raised. What is the economic and public health benefit of making better decisions on more chemicals? Organisational inertia was also raised, and the risk averse nature of both regulators and scientific advisory panels/committees.

41. Training needs were discussed, especially at a UK level. There are lots of specialisms within NAMs, and it is difficult to have all of these fully represented within a regulatory body. One suggestion was to have a separate academic unit/centre, funded by government, to go into depth on all these methods and be available to be called upon by scientific advisory committees. Another participant agreed that there needs to be a group in the UK focussed on translational applied research.

42. It was noted that the UK is still taking part in EU Horizon programmes and training people, though this needs to be better consolidated in the UK. One observation was that next generation toxicologists in training tend to be very mechanistic but not so able to conduct risk assessments; bridging this gap is therefore key. One participant was surprised there is no strategic priorities funding in this area.

Session II

The value of data and the right data

Dr Frederic Bois (Certara) presented on the “Replacement of animal experiments with a combination of innovative mathematical modelling and in vitro assays”

43. Previously, animal models have been used, with extrapolations that are not always well defined. Today, animal models are still considered the gold standard because there are a lot of data from, and experience with, them, and sometimes there are no human data. Therefore, modellers are often asked to validate a physiologically based pharmacokinetic (PBPK) model using animal data, for example by checking fit and extrapolations across three animal species, which creates a lot of work.

44. There is now a consensus that to better predict toxicity in humans, human cells should be used. In this respect, more sophisticated in vitro systems (that use human cells) are being developed. However, because data provided by in vitro models cannot directly predict in vivo effects, models that can simulate toxicokinetics (TK) and toxicodynamics (TD) are also needed. PBPK/TD models, linking PBPK to quantitative AOP or systems toxicology models, can be used in this instance, as they effectively describe the relationship between the in vitro concentration and the concentration in cells in vivo, and the subsequent effects. PBPK/TD models are mechanistic and therefore can be parameterised with in vitro data. Such models also have better extrapolation power than more empirical models. In vitro to in vivo extrapolation (IVIVE) works quite well in this context.
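
As an illustration of how in vitro data can parameterise a kinetic model, the sketch below scales an in vitro intrinsic clearance measured in hepatocytes to a whole-liver value and then applies the widely used well-stirred liver model to estimate hepatic clearance. This is a minimal sketch and not the approach presented at the workshop. All numerical values (intrinsic clearance, hepatocellularity, liver weight, hepatic blood flow, unbound fraction) are illustrative placeholders, and the function names are hypothetical.

```python
def scale_intrinsic_clearance(clint_ul_min_per_million_cells: float,
                              million_cells_per_g_liver: float,
                              liver_weight_g: float) -> float:
    """Scale in vitro intrinsic clearance (uL/min/10^6 cells) to whole liver (L/h)."""
    ul_per_min = clint_ul_min_per_million_cells * million_cells_per_g_liver * liver_weight_g
    return ul_per_min * 60.0 / 1e6  # uL/min -> L/h


def hepatic_clearance_well_stirred(clint_l_h: float,
                                   fraction_unbound: float,
                                   liver_blood_flow_l_h: float) -> float:
    """Well-stirred liver model: CLh = Qh * fu * CLint / (Qh + fu * CLint)."""
    unbound_clint = fraction_unbound * clint_l_h
    return liver_blood_flow_l_h * unbound_clint / (liver_blood_flow_l_h + unbound_clint)


# Illustrative placeholder inputs (assumptions, not measured values).
clint_in_vitro = 10.0      # uL/min per 10^6 hepatocytes
hepatocellularity = 120.0  # 10^6 cells per g liver
liver_weight = 1500.0      # g
blood_flow = 90.0          # L/h
fu = 0.1                   # unbound fraction in blood

clint_liver = scale_intrinsic_clearance(clint_in_vitro, hepatocellularity, liver_weight)
cl_hepatic = hepatic_clearance_well_stirred(clint_liver, fu, blood_flow)
print(f"Scaled CLint: {clint_liver:.1f} L/h, hepatic clearance: {cl_hepatic:.2f} L/h")
```

In the well-stirred model the predicted hepatic clearance can never exceed liver blood flow, which gives a simple sanity check on any scaled value before it is passed to a fuller PBPK model.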

45. Hence there is a need to understand and model TK, TD, and their interplay. It is important to model TD because it can affect TK by feedback effects, for example in the liver where cytotoxicity can lead to reduced metabolism. However, there are several challenges to this, as there may be no obvious targets, and a chemical might have a mixed and complex mode of action. Cell co-culture assays and human-on-chip systems may help and there is a growing recognition that interactions between cells are very important. Although there is a lot of enthusiasm about this, it should be remembered that these are still in vitro systems which have limitations. Complex in vitro systems may pose ethical problems, such as determining when consciousness emerges. Another challenge which is starting to be seriously tackled is the complexity of metabolic processes. However, understanding the fate and effects of metabolites even in vitro requires large analytical chemistry resources and assay development time.

46. Building generic PBPK/TD models for predicting toxicity faces other challenges:

i) even if we have defined the AOP and mechanism of action (MoA), it may be difficult to model these mathematically and to integrate the large amounts of omics data using current statistical approaches; and ii) understanding the details of TK and toxic effects requires many data and implies significant costs and logistics to organise.

47. In addition, regulatory agencies are starting to ask how to address variability in humans, instead of applying blanket safety factors. Assessing this variability is becoming possible with high-throughput in vitro systems but implies proper statistical analysis. It is a challenge to design an in vitro model which captures human variability. Relatively simple approaches, such as read across, QSAR, or small quantitative AOP models may also be useful. Within a properly defined domain of validity, they can be used to extrapolate measurements made in cells to humans, and eventually assess variability.

48. How can omics and bioinformatics data be integrated? Systems biology models may be a viable answer to that challenge. They can model biochemical reactions, organelle, or cell response, up to tissue effects. Virtual organ models have been developed, such as the cardiac simulator developed by the US EPA, and those can be integrated into a complete virtual body model. There is an on-going virtual human (VPH) project funded by the Dutch government. However, this requires strong interactions between physiologists, biochemists, bioinformaticians and mathematicians, whereas those communities tend to work in isolation. The good news is that computational models are being integrated earlier in the design of research projects and can even be at the core of Research and Development projects.

49. What roadmap steps are needed to get regulatory acceptance as the scientific evidence emerges? Dr Bois discussed that regulatory agencies are duly cautious and do not want to miss unforeseen targets. All possible mechanisms of action should be investigated for any chemical. New methods and models can only partly address that need in specific areas. For now, a mix of standard screening tools, statistical, or empirical models and new approaches is needed for tiered data integration and analysis. As new methods become used in tandem with standard ones, their pros and cons can be understood, and confidence in the best ones should increase. There is a need for not only tiered risk assessment but also tiered model building and tiered data development and integration.

Dr Costanza Rovida (CAAT Europe) presented on “Internationalization of read-across as a validated new approach method (NAM) for regulatory toxicology”.

50. A workshop report, “Internationalization of read-across as a validated NAM for regulatory toxicology”, was published in 2018 (Rovida et al., 2018). It benefitted from the participation of many people with different expertise and covered a wide variety of issues.

51. For confidence building there will need to be chemical and biological starting information for similarity assessment; NAMs and AOPs; Absorption, Distribution, Metabolism and Excretion (ADME); Applicability Domain of Read across (RAx) flow; RAx for non-classified substances; Hazard characterisation and potency.

52. For good read across there needs to be an unambiguous algorithm. What is needed should be properly defined and be independent. There should be learning from OECD Principles, established for the validation of QSAR. There also needs to be a defined applicability domain, Good Laboratory Practice (GLP) principles, and mechanism interpretation that is justified.

53. From an international perspective of risk assessment there needs to be an awareness that there are different approaches worldwide, and the risk assessment needs to be as reproducible as possible. An example of this is RISK-HUNT3R: a group that can support industry to understand risk assessment approaches.

54. There needs to be good communication and dialogue between regulators and industry. They both share the aim to make the world and environment safe. To reach that goal the dialogue needs open-mindedness to new ideas, eagerness to acquire new skills, and direction. Education is not just for industry and regulators; teaching of NAMs should start at university and secondary school.

Professor Thomas Hartung (Johns Hopkins University) presented on “Toward a paradigm shift in toxicity testing to improve public health”

55. There is mounting pressure to move away from animal testing. There needs to be a move from discussion around ethics to reproducibility and quality of science. It is possible to adapt, but how will the change be made? Animal tests are still strongly overestimated in what they can do. There is not tremendous appetite to talk about the shortcomings of animal tests as these have been used for a long time. There needs to be an incentive to objectively assess what these methods can do.

56. Therefore, it is now key to try to explain what new methodology can and cannot do, and to accept the fact that they can outperform the animal methods. Algorithms have only recently become powerful enough to handle these types and sizes of data. Cell culture has advanced so much in the last 10 years, with the example of microphysiological systems.

57. These new alternatives are not just for regulatory use. They are frontloading for pharma, green toxicology and green chemistry testing strategies.

58. The big challenge is the need for good-quality reporting and results/validation; how data are handled and reviewed is also important. This will be fundamental in evidence integration and defining each approach.

59. The roadblocks for a lot of these processes are often economic and legal as well as issues around validation. However, most of the change will likely come from politics.

Session II Roundtable discussion

60. A constant issue being raised is a lack of 'human data'. What can/needs to be done to improve the quality and availability of human data? The toxicological and clinical communities need to work together on this.

61. There are conversations around the word validation and what it means for NAMs in the regulatory space. Could synthesising the evidence, and providing guidance on doing so, be an alternative way forward?

62. It was considered that there shouldn't be an under-estimation of the ability of people to understand and feel confident that they can accept more sophisticated methods and complex data. An example of this is the Benchmark Modelling approaches which have been around and accepted for more than 20 years.

63. Some participants suggested that the classification of methods as NAMs is too broad for acceptance by regulators and those not familiar with them. Should methods be subdivided and adopted?

64. Suggestions around what might invalidate the data were also put forward. There will be a need to integrate these and consider them as uncertainties.

65. There are good scientific tools that are providing useful information, but they are expensive. Why should the regulator only rely on freeware? Discussions considered that currently, most regulators do not have the IT infrastructure to accept/handle the data. How many data do applicants have to share with the regulators, e.g., when submitting PBPK data/models, does the raw code need to be provided? Do contract research organisations (CROs) need to work with regulators to be able to reproduce the data? Regulators need to be ready to receive the data straight away and be able to respond.

Session III

Adopters of change: Innovation vs Adoption vs Compatibility

Dr Fiona Sewell (NC3Rs) presented on “International regulatory acceptance of non-animal methodology in safety assessment”.

66. The National Centre for 3Rs has a large toxicology and regulatory sciences programme. The NC3Rs have a vision to apply the 3Rs in the current regulatory framework and provide funding including through CRACK IT Challenges.

67. Lessons learnt included the need to exploit the latest science, existing data, collaboration, safe-harbour approaches, and regulator buy-in.

68. Global harmonisation will be key, especially with different regulatory requirements that can cause 3Rs issues regarding testing needs. Inherently, there will be variation in interpretation of the guidance. OECD test guidelines do exist to help align this and, more importantly, some regulators are open to alternatives. However, requirements and needs can be confusing, often resulting in a risk-averse approach.

69. There was discussion on work that needs to be done including exploring how animals do not always provide the best models.

70. There needs to be a bigger focus on drivers and incentives with a need for international buy-in. This will be a stepwise process to build confidence in NAMs and provide evidence and be clear about what questions need to be answered.

71. Science and technological innovation are crowded and moving fast. Which NAMs should be invested in? Which NAMs need to be included in decision making? Time pressures and funding are challenges with implementing methods.

72. Now is the time for a culture change to allow regulators to move forward from a reliance on animal testing.

Dr Ans Punt (Wageningen University) presented on “Moving the paradigm shift in toxicology towards an animal-free chemical risk assessment”.

73. Dr Punt introduced NAMs at Wageningen Food Safety Research (WFSR) and how Quantitative in vitro to in vivo Extrapolation (QIVIVE) and in vitro PBPK are used for predicting safety of chemicals in feed and food.

74. Regulatory acceptance of these models may be easier than other areas such as PBPK models. It is better to have some information rather than none at all.

75. The challenges of regulatory acceptance can be seen for example when EFSA evaluated coumarin and established the TDI. Was this risk assessment too strict or is coumarin in cinnamon a real concern?

76. For example, the bioactivation route and detoxification routes were assessed in vitro including the measurement of the conversion of coumarin to its metabolites. However, rats do not have a detoxification route, but humans do. Humans are less sensitive to this than rats. EFSA was enthusiastic about the model but had not accepted it because it was not validated as no human data were available. If we do not have data available to validate these models, it will be difficult to get a model accepted. In order to get a model accepted there would need to be a refinement of the risk assessment. The key point is how are models going to get to a point whereby they are accepted for more involved and important decisions.

77. The 2017 Lorentz Centre Workshop was an important output (Punt et al., 2020). It discussed the OECD guidance documents and established OECD guidelines. However, there is no risk assessment framework that requires the submission of quantitative in vitro kinetic data.

78. It was suggested that there should be a survey of experts in food safety to discuss the important drivers of, and barriers to, the use of the 3Rs in safety evaluations and their predictability.

79. One way to gain confidence in PK predictions without 1:1 in vivo data is to determine what constitutes good-quality data. Studying the quality of in vitro data for a range of chemicals, including clearance measurements and complex kinetic processes, should help to reduce the large variation in output.

80. Evaluating PBPK model predictions can show large variations in the Cmax values because of different input approaches. Therefore, it is important to know about the characteristics of the chemicals that are over-predicted.

81. Finally, PBPK modelling will play a crucial role in the validation and acceptance of NAMs.

Dr Patience Browne (OECD) presented on “Regulatory use and acceptance of alternative methods for chemical risk assessment”.

82. Dr Browne introduced the OECD Chemical Programme and various initiatives developed to increase the uptake of New Approach Methods (NAMs) and reduce in vivo testing.

83. The OECD Test Guidelines Programme publishes internationally harmonised methods for evaluating chemical hazards, the results of which, when conducted following the principles of Good Laboratory Practice (GLP), are covered by the agreement on Mutual Acceptance of Data (MAD). MAD is a legally binding agreement between OECD countries which requires all countries to accept the results from the OECD Test Guidelines if they have the requirement for such data. Thus, MAD vastly reduces duplicative chemical safety testing and saves tens of thousands of animals and hundreds of millions of euros each year. There are more than 35 internationally harmonised NAMs included in OECD Test Guidelines.

84. The OECD was an early adopter of the Adverse Outcome Pathway (AOP) framework and has heavily invested in the AOP Knowledge Base and various Guidance Documents on the development and review of AOPs. When initially developed, AOPs were intended to be a tool to develop NAMs including Integrated Approaches to Testing and Assessment (IATAs). OECD Guidance Document number 260 provides information on the use of AOPs for developing IATAs. The publication of this document was coincident with the OECD Hazard Assessment Programme’s IATA Case Studies Project that uses innovative approaches to address various endpoints for a specific regulatory context. Case Studies are submitted, peer-reviewed, and revised on an annual cycle and final versions are publicly available through OECD websites. In addition to the case studies, a “Considerations” document is updated annually and evaluates the lessons learned from all case studies submitted in the annual cycle. The IATA project is now in its 7th review cycle. Eight case studies in the review process focus on developmental neurotoxicity, inhalation toxicity, and the use of transcriptomics to evaluate endocrine disruptors.

85. The IATA is intended to be flexible and use expert judgement. As decisions become less flexible, IATAs move towards “Defined Approaches”, which are rules-based, quite structured and do not use expert judgement. Different individuals using a Defined Approach should come to the same conclusion. Defined Approaches may be suitable for including in OECD Test Guidelines, for example, Test Guideline 497 describing Defined Approaches for Skin Sensitisation, which was published in 2021.

86. The OECD Chemical Safety Programme is continuing to evaluate how NAMs and IATAs can be used for regulatory decisions on the safety of chemicals. The results of regulatory assessment methods must be reproducible; the test system must be relevant to the target species (e.g., humans), and performance of the NAM must be as good as or better than the current, usually in vivo, method. Consolidation of toxicological data in large databases has allowed for meta-analyses and led to increasing recognition of the limitations in the reproducibility of animal test methods and in the relevance of animal models to human health outcomes.

87. The OECD is working to prove non-animal methods are just as useful, by using a global datasphere that incorporates 90% of the data in the world that was generated in the last 2 years.

88. The OECD is also developing tools to facilitate sharing of toxicological data. OECD Harmonised Templates are designed to capture chemical hazard data and are compatible with housing information in structured databases. This database is the centre of an electronic ecosystem, and compatible with a variety of internal tools such as the AOP Knowledgebase and OECD QSAR Toolbox, and interoperable with third-party electronic tools. As we move forward, the intention is to ensure the OECD electronic ecosystem can facilitate data sharing, help to develop new NAMs, and reduce the need for generation of new animal data on chemical hazards.

Dr Harvey Clewell presented on “Development of methods for in vitro to in vivo extrapolation of cell-based toxicity assays to inform risk assessment”.

89. In Vitro to In Vivo Extrapolation (IVIVE): In vitro toxicity assay results expressed in terms of media concentrations are converted to the equivalent in vivo doses using in vitro metabolism data to estimate in vivo clearance of the tested chemical (Yoon et al., 2012). In a typical tiered risk assessment approach, three different IVIVE approaches are required, consistent with the experimental data being extrapolated (Andersen et al., 2019):

- Tier 0 – exposure estimation, QSAR, read across.

- Tier 1 - high throughput assays (HT-IVIVE).

- Tier 2 - fit for purpose assays (Q-IVIVE).

- Tier 3 – targeted animal testing (PBPK-IVIVE).

90. High-throughput IVIVE uses in vitro data on the rate of metabolism and blood binding of the compound at low concentrations to estimate the equivalent dose based solely on hepatic and renal clearance. Quantitative IVIVE attempts to incorporate more complete information on the dose-dependence of metabolism and binding, both in the in vitro toxicity assay and in vivo. Finally, PBPK-IVIVE models can be used to incorporate differences in physiology and metabolism in order to extrapolate accurately from animal to human.

91. In the early days of in vitro toxicity testing, only the in vitro bioactivity (effective concentration) was considered when trying to predict relative potency in vivo. Unfortunately, the most potent compounds in the in vitro studies were not the most potent compounds in vivo. This discrepancy was largely due to a failure to consider differences in the in vivo clearance; for example, if a compound that had a higher intrinsic potency (in terms of cellular concentration) was eliminated from the body more rapidly, it might not have effects at as low a dose (mg/kg/d) as expected. A joint study by The Hamner Institutes for Health Sciences and the US EPA ToxCast program first demonstrated the necessity of including IVIVE in chemical prioritization (Rotroff et al., 2014). This study demonstrated that the equivalent in vivo doses for two compounds producing effects at the same in vitro concentrations could differ by several orders of magnitude due to differences in their in vivo clearance. The US EPA has since always used the IVIVE approach for high-throughput screening in the 2nd tier, using a conservative approach. For example, there are two common assumptions made concerning hepatic clearance, restrictive or non-restrictive, that make a big difference for some chemicals (Yoon et al., 2012); the non-restrictive clearance assumption typically works better for environmental chemicals, but the US EPA assumes restrictive clearance because it results in a lower (more health conservative) Human Equivalent Dose (HED).
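The effect of the clearance assumption on the equivalent dose can be illustrated with a minimal sketch. It assumes a well-stirred liver model, renal clearance equal to glomerular filtration of the unbound fraction, linear kinetics and complete oral absorption. The function names and all parameter values are hypothetical placeholders chosen for illustration; they are not taken from the presentation or from any specific EPA tool.

```python
# Illustrative HT-IVIVE reverse dosimetry. All parameter values below are
# hypothetical placeholders, not measured data.

def hepatic_clearance(q_h, cl_int, fu, restrictive=True):
    """Well-stirred liver model; returns hepatic clearance in L/h.

    q_h    : hepatic blood flow (L/h)
    cl_int : scaled intrinsic clearance (L/h)
    fu     : fraction unbound in blood (0 to 1)
    """
    # Restrictive: only the unbound fraction is available for metabolism.
    # Non-restrictive: binding is assumed not to limit metabolism.
    effective = fu * cl_int if restrictive else cl_int
    return q_h * effective / (q_h + effective)


def steady_state_conc(dose_rate, body_weight, gfr, fu, cl_h):
    """Steady-state blood concentration (mg/L) for a constant dose rate (mg/kg/day)."""
    total_clearance = gfr * fu + cl_h          # renal + hepatic, L/h
    intake = dose_rate * body_weight / 24.0    # mg/h
    return intake / total_clearance


def equivalent_dose(ac50_um, mol_weight, css_per_unit_dose):
    """Dose (mg/kg/day) whose steady-state concentration equals the in vitro AC50."""
    ac50_mg_per_l = ac50_um * mol_weight / 1000.0   # uM * g/mol = ug/L, then to mg/L
    return ac50_mg_per_l / css_per_unit_dose


Q_H, GFR, BW = 90.0, 6.7, 70.0     # assumed adult physiology (L/h, L/h, kg)
CL_INT, FU = 50.0, 0.05            # assumed chemical-specific inputs
AC50_UM, MW = 10.0, 250.0          # assumed in vitro potency (uM) and molecular weight (g/mol)

doses = {}
for label, restrictive in (("restrictive", True), ("non-restrictive", False)):
    cl_h = hepatic_clearance(Q_H, CL_INT, FU, restrictive)
    css = steady_state_conc(1.0, BW, GFR, FU, cl_h)   # Css per 1 mg/kg/day
    doses[label] = equivalent_dose(AC50_UM, MW, css)
    print(f"{label}: Css = {css:.3f} mg/L per mg/kg/day, "
          f"equivalent dose = {doses[label]:.2f} mg/kg/day")
```

For a highly bound chemical such as this hypothetical one, the restrictive assumption gives a lower clearance, a higher steady-state concentration per unit dose and therefore a lower equivalent dose, which is the health-conservative direction described above.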

92. In PBPK models, IVIVE can be used to calculate the in vivo model metabolism parameters based on the protein content of the metabolizing cells and number of cells. One example of a PBPK platform that uses IVIVE is Simcyp. This platform is well accepted by the pharmaceutical industry and regulatory agencies. Simcyp was developed for the pharmaceutical industry and is proprietary software, but the open-source platform, PK-Sim, provides similar capabilities and is also suitable for some environmental or personal care compounds. However, it is important to recognize that environmental chemicals typically do not possess the characteristics of a “druggable” compound, which include being poorly metabolised, non-volatile, and not highly lipophilic. They are also often associated with more complex metabolism, particularly by cytochrome P450s (CYPs). Clearly, a lot of work needs to be done in developing PBPK modelling platforms for broader application to environmental chemicals (Moreau et al., 2022). In silico predictions of intrinsic clearance for environmental compounds are not yet reliable, and more development is needed for in vitro assays of metabolism for slowly cleared chemicals, volatiles, and lipophilic compounds.

93. Ultimately it will be possible to use IVIVE at all stages of the regulatory decision-making process, but active participation by regulators to help develop the necessary methods and modelling platforms will be required. The EU Joint Research Centre and the US EPA Center for Computational Toxicology and Exposure have been leading this effort to date.

Session III Roundtable discussion

94. A participant raised the point that the OECD is made up of 36 countries, but what about other countries? What is their viewpoint, and what does this mean?

95. Rather than measure the variability in kinetics it might be better to simulate it. Simcyp does a good job of this. If using in vitro approaches which are not primary cells, then expression in the cells should be considered. A continuum, more of a tiered approach was suggested. For a lower tier decision, it may be possible to use a simple PK model. It is possible to increase the complexity to match the uncertainty which would be allowed within the constraints of the model. It should be remembered that there are other contributors besides genetics and the type of cells e.g., lifestyles/diets.

96. A participant raised whether the UK’s appetite for risk is poor. Should risk managers be asking about a level that is safe, or should they be asking where it becomes dangerous or what level of protection should there be?

97. Even though the public hold diverse views, the general consensus favours moving away from animal testing where possible, without compromising a high level of safety. Making the public aware of the uncertainty in animal data could help towards the acceptance of uncertainty in NAMs. The discussions that have taken place over COVID vaccines and the science/public media reactions to this should be considered. This links back to the issue of how much confidence there is that what is available is fit for purpose, and how confidence can be improved in approaches that are going to replace animals. There will be a reluctance to increase TDIs.

98. There has already been marshalling of resources and generally there is a reluctance to say that chemicals are safer than originally thought. Examples included trying to use chemical-specific adjustment factors (CSAFs). With human data, there is a lot of resistance to removing the factor of 100. However, if human-specific data are available, a reduction in safety factors would be appropriate. Would there be resistance to it not being a factor of 100?

99. Problem formulation is about asking the right questions. Is it safe? When does it become problematic? And what impact have case studies had on the acceptability of these approaches?

100. The need for a roadmap for the UK is timely and fundamental to start getting NAMs accepted by regulators. It needs a clear vision and steps, stakeholders, funding, timeline, and an assessment of what needs to change in law to meet the data requirements. What does validation mean or what are meaningful results? For NAMs to be accepted by regulators, barriers need to be broken down, through funding and from inception to translation.

Day 2

An overview was given to remind participants what had been covered on Day 1.

Professor Rusty Thomas (EPA) presented on “The Changing Toxicology Landscape: Challenges and Innovations to Adapt”.

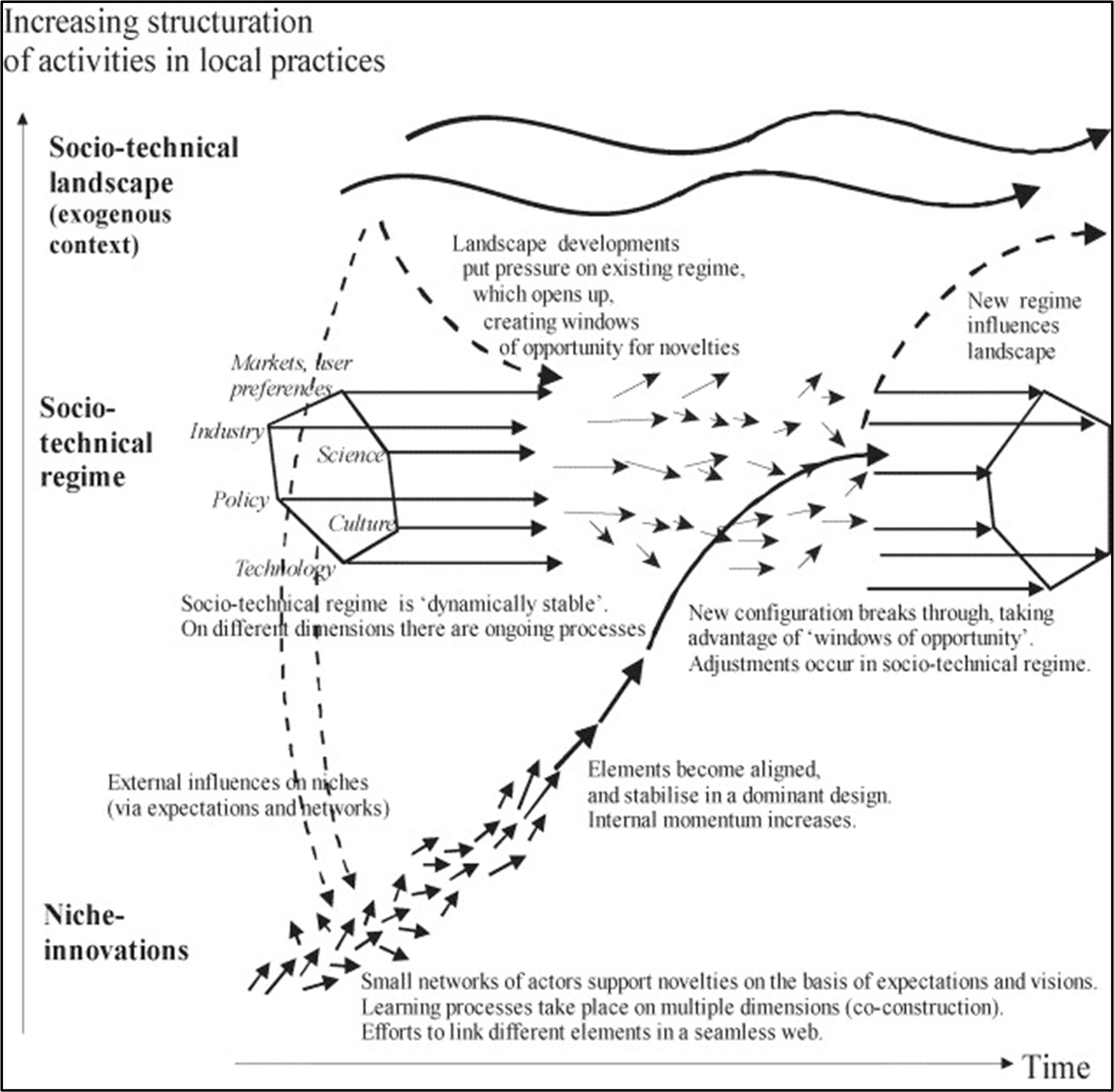

101. The nature of the discussion has changed over time since the release of Toxicity testing in the 21st century: a vision and a strategy (National Research Council, 2007).

103. There have been different roadmaps, and these are a good tool to force prospective thinking as an organisation and can help implement NAMs in the regulatory space. At the EPA, they have developed several roadmaps that cover the development and implementation of NAMs in multiple contexts. These include the development and application of NAMs at the Agency level (EPA NAMs Work Plan), application under specific regulatory statutes such as TSCA (TSCA Alternatives Strategic Plan), and strategic research on NAMs (CompTox Blueprint).

104. The landscape of toxicology is changing in multiple ways in order to apply NAMs in regulatory decision making. These changes include:

- Systematically addressing the limitations of current NAMs.

- Accepting that there is likely not a primary mechanism/mode of action for most environmental/industrial chemicals.

- Working through how to assemble NAMs in a coherent, practical, fit for purpose testing framework.

- Understanding how to benchmark new approaches.

- Evaluating protection vs. prediction in our current and future approaches.

- Developing a flexible and fit for purpose validation/confidence framework for evaluating new approaches.

- Quantifying public health and economic trade-offs of testing more chemicals/faster.

105. In the first area of change, there needs to be an acknowledgement that while there are technical challenges associated with NAMs (e.g., black-box predictions and limited chemical domain applicability), there need to be concerted and systematic research initiatives to overcome them. For example, many in vitro systems have limited coverage of important cellular and intracellular processes compared to a whole animal system. To address this limitation, high content profiling systems such as transcriptomics and imaging systems are being developed and applied across multiple cell types to comprehensively evaluate chemical interactions over broad biological space. In another example, there have been a number of improvements in in vitro exposure systems that allow high-throughput testing of volatile chemicals and aerosols in concentration response.

106. In the second area of change, the community needs to acknowledge that most chemicals interact with biological systems in a non-selective manner where multiple biological pathways and processes are impacted in a narrow dose/concentration range. The non-selective nature of chemicals on biological systems impacts how we think about mode of action and test chemicals using NAMs.

107. The third area of change follows on the last one. We need to develop a fit-for-purpose toxicological testing framework that is consistent with the lack of biological selectivity of chemicals. For most chemicals we may not be able to identify specific mechanisms of action due to the lack of selectivity and we would need to use general biological activity to derive a point-of-departure. For those few that are selective, we can identify their primary molecular and cellular targets and link them to key events associated with their toxicity using the AOP framework to predict potential organ and tissue effects more accurately.

108. In the fourth area, we need to better understand how to benchmark NAMs. Comparing with existing models may not always be appropriate if the current models don’t predict human toxicity; however, historically we have compared results with NAMs with our traditional animal studies. But, in order to do that, we need to characterize the variability of our existing models. Using databases of curated legacy toxicity studies, we have estimated that LOAEL values from repeat dose studies can vary +/- 10-fold. Similarly, we have curated time course in vivo toxicokinetic studies to help set expectations for in silico and in vitro-to-in vivo extrapolation methods.

109. In the fifth area of change, the community needs to further discuss the issue of protection versus prediction for NAMs as well as our existing practices. In evaluating the concordance of rodent and human toxicological responses in pre-clinical and clinical pharmaceutical studies, rodents show limited ability to accurately predict the specific toxicological response in humans, but they are able to more robustly predict the absence of toxicological response. Current risk assessment practices are generally consistent with these observations given that we typically identify the most sensitive response that is considered adverse and use that to calculate a point-of-departure and derive a protective toxicity value. For NAMs, a similar approach could be taken, and this was highlighted in a case study performed by Katie Paul-Friedman where she compared the results from bioactivity in the ToxCast battery with in vivo points-of-departure from repeat dose animal studies. For ~90% of the chemicals, in vitro bioactivity was generally protective of in vivo toxicological responses. On average, it was 100-fold protective. In addition to the ToxCast battery of assays, similar results have been demonstrated using biological activity in high-content imaging assays.
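The kind of comparison described above, checking whether a NAM-based point-of-departure (POD) is protective of the in vivo POD, can be sketched as follows. This is an illustrative outline only: the function name and the four example value pairs are invented for demonstration and do not reproduce the case study's data or method.

```python
import math
from statistics import median


def protective_summary(pairs):
    """Summarise how often a NAM-based POD is protective of the in vivo POD.

    pairs: list of (nam_pod, in_vivo_pod) tuples in the same dose units
           (e.g. mg/kg/day). A NAM POD is counted as protective when it is
           at or below the in vivo POD, i.e. log10(in_vivo / nam) >= 0.
    Returns (fraction_protective, median_log10_ratio).
    """
    log_ratios = [math.log10(in_vivo / nam) for nam, in_vivo in pairs]
    fraction = sum(r >= 0 for r in log_ratios) / len(log_ratios)
    return fraction, median(log_ratios)


# Hypothetical example values, for illustration only.
example_pairs = [(0.1, 10.0), (1.0, 50.0), (5.0, 2.0), (0.02, 3.0)]

fraction, median_log_ratio = protective_summary(example_pairs)
print(f"Protective for {fraction:.0%} of chemicals; "
      f"median margin = {10 ** median_log_ratio:.0f}-fold")
```

Working on the log10 scale keeps the margin symmetric around zero, so a positive value means the NAM POD is lower (more protective) than the in vivo POD and a negative value flags the chemicals for which it is not.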

110. In the sixth area of change, we need to develop fit-for-purpose validation and scientific confidence frameworks that allow the community to develop the appropriate confidence in NAMs for regulatory application in a more timely and sustainable way. The EPA are planning to deliver an EPA scientific confidence framework to evaluate the quality, reliability, and relevance of NAMs for our decision making in 2024.

111. In the seventh area of change, the community needs to evaluate the public health trade-offs of uncertainty, timeliness, and costs associated with different toxicity testing methods. If there are two toxicity testing methodologies available and one is more uncertain but gets the answer 5 times faster, what is the trade-off? In our analysis, the timeliness of toxicity testing results has at least as big, and in many cases bigger, impact on public health than uncertainty. In other words, knowing the results of a toxicity test sooner is more important than the degree of certainty in those test results. This has important implications for NAMs as many of them are quicker but are perceived to be more uncertain compared to the slower traditional animal tests.

112. Finally, one of the biggest challenges facing the toxicological landscape is organisational inertia. In many regulatory agencies, if the wrong decision is made using a new method there is a significant downside, but if a new method is used, it will not always be widely publicised. For environmental and industrial chemicals, the right answer is not always known immediately, if at all.

Session IV

From Basic to Applied - Science & Technology: Distil, Review, Validate

Professor Mark Viant (University of Birmingham) presented on “The use of case studies, best practice and reporting standards for metabolomics in regulatory toxicology”.

113. When developing and applying metabolomics in toxicology (and more recently in regulatory toxicology), NAMs need to demonstrate their relevance and reliability (including laboratory reproducibility), with transparent reporting. At EUROTOX 2021, there was emphasis on strengthening read-across using omics derived evidence from an invertebrate model.

114. The relevance of metabolomics in the context of toxicology is fundamental when looking at metabolites that are key event biomarkers of adverse effects, e.g., changes in glutathione concentration due to oxidative stress and ornithine/cystine ratio for predicting in vitro developmental toxicology. The importance of targeted measurements of toxicologically relevant metabolites was highlighted, along with the detection and reporting of unknown ‘features’ within mass spectrometry data sets to grow new knowledge of metabolic key event biomarkers.

115. There is a need to define which metabolites should be routinely targeted when using metabolomics in a toxicology context. For example, there are advantages in using a smaller library ‘panel’ where both the toxicological relevance of the metabolites is known as well as their analytical characteristics (e.g., chromatography retention times, MS-MS conditions, linearity of response), rather than a solely untargeted approach. An example of such a strategy was provided from the transcriptomic area: S1500+, in which a library panel of ca. 2000 genes has been determined to show high toxicological relevance.

116. Inspired by the S1500+ study, and by the urgent need to defragment information sources describing metabolic key event biomarkers, a recent collaboration between Professor Viant's group and ECHA was presented in which a ‘panel’ of 722 metabolites (‘MToX700+’) has been developed (Sostare et al., 2022). These metabolic biomarkers are all known, through previous studies, to be associated with disease outcomes, toxicity and/or adverse effects.

117. The important issue of reporting metabolomics results consistently was raised. A paper from Nature Communications was introduced that described the outputs from MERIT (Metabolomics Standards Initiative in Toxicology), a 2-year project with industry, government agencies and academia (Viant et al., 2022). Additionally, recent progress by the OECD was described, towards developing both metabolomics and transcriptomics reporting frameworks. This was a good example of where two communities worked together to provide a single framework – the OECD Omics Reporting Framework (Harrill et al., 2021). Consistent reporting is key and has proven a significant roadblock to being able to move omics forwards within the NAM space.

118. An on-going project that is addressing the reproducibility question in metabolomics is the MetAbolomics Trial for CHemical Grouping (MATCHING) project. This is an international ring trial involving 7 institutes (from academia, government, and the private sector), where each partner is measuring, analysing, and drawing conclusions from a single batch of biological samples from a chemical grouping study. The aim of the project is to determine the reproducibility of metabolomics, from a toxicology perspective by assessing the extent to which partners derive the same chemical groups using only metabolomics data.

119. Finally, the “inadvertent” advantage of using full scan mass spectrometry techniques for metabolomics was discussed, specifically that in addition to the intended measurement of endogenous metabolites, this approach can also detect xenobiotic compounds (e.g., a drug or chemical contaminant exposure) within the same analysis. This includes biotransformation products of the parent xenobiotic compounds. While research to more fully evaluate this is on-going, early findings suggest it is possible to extract information for ADME and TK purposes as well as obtaining the ‘classical’ endogenous metabolomics information from the same sample using the same assay.

120. In conclusion, Professor Viant’s overall thoughts were that it is no longer a question of if, but when, these NAM approaches are taken up more routinely for toxicology regulatory purposes.

121. At the end of the presentation Arthur de Carvalho e Silva was introduced as a new 4-year postdoctoral research fellow in computational toxicology working jointly with the University of Birmingham, HSE and FSA in the NAMs area.

Professor John Colbourne (University of Birmingham) presented on “Precision Tox Project”.

122. The Precision Tox project is a new approach, that is UK led, and involves investigators from 15 organisations across 8 countries, and it is supported by the EU’s Horizon 2020 programme. The objective is to show that it can deliver biomolecular key events and their biomarkers, which are necessary for AOPs. The purpose is to attempt to repair the division between human and environmental risk assessment, showing that a unified approach is possible.

123. Current toxicological models are based on analogy, whereas the homology approach is based on similarities shared by evolutionary history in genomes. The project is testing the idea of toxicity by descent, and conserved toxicity pathways, using a suite of species. These include the arthropods and nematodes representing the invertebrates and Xenopus embryos, zebrafish embryos and human cells representing the vertebrates. Seventy-one percent of gene-disease families evolved from ancestors of both invertebrates and vertebrates.

124. The proof of principle was tested using a PPAR agonist. Mice have many of the human genes except one, and all of the nuclear receptors. Xenopus have one nuclear receptor missing. Zebrafish have all the nuclear receptors but miss a few proteins and genes. The PPAR agonist causes liver fibrosis in all these species. Daphnia have only one of the nuclear receptors, a few genes, and no liver. However, they showed a similar gene response to mice, so despite having no liver the same pathway was activated.

125. Another aspect is genetic sources of variation in humans resulting in differences in susceptibility. The project is mapping genetic variation in the human population in genes which affect susceptibility levels.

126. The project is conducting case studies with the EU’s Joint Research Centre (JRC) and EU/UK regulatory agencies. The approach can help industry to innovate responsibly. Stakeholders have input into the roadmap. The project will hopefully lead to fewer toxic chemicals in the environment.

Dr Stuart Creton (Food Standards Australia New Zealand) presented on “NAMs in food chemical risk assessment: small agency perspectives”.

127. Food Standards Australia New Zealand (FSANZ) develops standards that regulate the use of ingredients, processing aids, colourings, additives, vitamins, and minerals. They also cover food composition, novel foods and new food technologies (e.g., GM) and labelling requirements.

128. In toxicology, FSANZ follows Codex guidelines for risk assessment purposes in line with EHC240. They look to harmonise proposed HBGVs with a JECFA opinion where appropriate. They use local consumption dietary survey data (national nutrition surveys) for their own country specific risk assessments. Dr Creton’s team also provide risk assessments for imported food.

129. The use of NAMs in toxicology and the FSANZ risk assessment processes are still very limited. If used, then examples have been in low-risk issues or use as a screening/prioritisation tool.

130. Examples of the use of NAMs by FSANZ have included the use of the Threshold of Toxicological Concern (TTC) (in the absence of chemical-specific toxicity data) in food contact material assessments and using PBPK modelling for PFAS for predicting human equivalent doses from animal data.

131. Another example was looking at the food additive potassium polyaspartate in wine. NAMs were used to decrease the number of animal tests required for carcinogenicity and developmental and reproductive toxicology (DART) assessments.

132. Dr Creton described his team and available resource as small with a high volume of assessments that need to be completed within statutory time frames. FSANZ has limited capacity to work on NAMs research themselves. However, they were keen to track and keep pace in this area.

Session IV Roundtable discussion

133. Participants discussed hypothetically if they had a new chemical, and wanted to know if it will be safe to use as a food ingredient, what would be done first?

134. It was suggested that use should be made of omics approaches (more for molecular changes), and phenotypic profiling approaches (i.e., ‘in vitro pathology’). Scientists who work with partners in Tox21 ask: ‘what complement of different cell types represents the broadest diversity of biological space?’

135. There are also a series of reference chemicals being assayed that are selective against different (known) biological targets (e.g., receptors, enzymes), and building a database of profiles that are indicative of specific MIEs (molecular initiating events). Therefore, when a screen is conducted with unknown compounds, it is possible to see whether the compound is 1) a selective/specific toxicant, or 2) a non-selective/broad toxicant.

136. A participant said it was more of a challenge for metabolomics (less so for transcriptomics). When mass spectrometry is applied to a biological sample, there are lots of unknown peaks, but presumably they represent endogenous metabolites.

137. At Badische Anilin- und SodaFabrik (BASF), unknown features are included (<10% of total features) because they are still predictive. But how does the regulator view this information, particularly if peaks change in a dose-response manner?

138. Therefore, levels of confidence in metabolite identification need to be considered. What level of confidence should be used? Is it possible that the unknown peaks could be used in regulatory decision making? But then does the mass spectrometry approach become more targeted?

139. Regulators’ perception tends to be that a lot of uncertainty is involved in the application of omics data. The OECD’s reporting framework helps to make sure it is clear what has been done. There needs to be more guidance documentation, e.g., making sure that data are reproducible. Regulators are looking for more case studies for additional confidence when using omics data.

140. From a scientific perspective, the technical challenges of omics technologies are being addressed. However, there is also the need to characterise uncertainties in the current model. There also needs to be evaluation of the approaches within the community. Are we using these approaches for health protection, or prediction? Someone needs to start applying this in a proper decision-making context.

141. There will be major computational challenges from a regulator’s perspective.

Session V

Paving the way for the paradigm shift: The UK Roadmap

Dr Camilla Alexander White presented on “Next generation risk assessment (NGRA) of systemic toxicity effects”.

142. There is increased interest from the UK on fundamental principles and paradigms of future regulations since EU exit and there is more discussion on the interpretation of the precautionary principle.

143. The UK chemical strategy was last published in 1999. In 2021, The Royal Society of Chemistry published Drivers and scope for a UK chemicals framework.

144. Controversy remains around the hazards vs risk paradigm and different codified hazards. However, there will be more controversy regarding the use of NAMs.

145. Endocrine disrupting chemicals for example were reviewed towards the end of 2019. There was a unanimous vote that a risk-based approach would be the most appropriate for endocrine disrupting chemicals.

146. Should there be a move into risk-based regulation? Therefore, the centre of decision making should be a “protection not prediction” philosophy. These decisions should be transparent and open to scrutiny. Science advisory groups/Committees are fundamental to some of this shift in culture (such as reviewing new content in future dossiers using NAMs and NGRA). Risk is a function of hazard and exposure and is still a function of NAMs.

147. The principles of NGRA were discussed. These principles underpin the use of new methodologies in the risk assessment of cosmetic ingredients.

148. Nine principles for cosmetics, with the overall goal of human safety, were discussed. The 9 principles of NGRA for cosmetics regulation (ICCR, 2018) could be drawn on as a parallel for principles covering all NAMs:

1. The overall goal is a human safety assessment.

2. Assessment is exposure-led.

3. Assessment is hypothesis driven.

4. Assessment is designed to prevent harm.

5. Assessment follows an appropriate appraisal of all existing information.

6. Assessment uses a tiered and iterative approach.

7. Assessment uses robust and relevant methods and strategies.

8. Sources of uncertainty should be characterised and documented.

9. The logic of the approach should be transparently and explicitly documented.

149. A tiered framework should be used for exposure assessment: deterministic, probabilistic, and specific models (with refinement to include biomonitoring).

150. Levels of confidence and uncertainty are also defined amongst the principles (ICCR, 2018).

151. There is a 10-step framework for the use of read-across in NGRA. Caffeine has been used as a model substance. Three times more work is involved than in a standard risk assessment or dossier. The framework starts with exposure, beginning with a deterministic assessment within the tiered exposure assessment.

Different types of tiers include:

• Tier 1 Scenario A Deterministic.

• Tier 2+ Scenario A Probabilistic.

• Tier 1 Scenario B Deterministic.

• Tier 2+ Scenario B Probabilistic.
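The difference between a deterministic tier and a probabilistic tier can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration only: the function names, the simple exposure equation (amount applied × fraction absorbed ÷ body weight), the uniform distributions and all numerical values are hypothetical and are not taken from the presentation or from the ICCR principles.

```python
import random

def deterministic_exposure(amount_mg_per_day, fraction_absorbed, body_weight_kg):
    """Tier 1 style point estimate in mg/kg bw/day from single conservative inputs."""
    return amount_mg_per_day * fraction_absorbed / body_weight_kg

def probabilistic_exposure(n_draws, seed=0):
    """Tier 2+ style estimate: sample each input from a (hypothetical) range
    and return the sorted distribution of exposures in mg/kg bw/day."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    draws = []
    for _ in range(n_draws):
        amount = rng.uniform(50.0, 200.0)   # mg/day, hypothetical range
        absorbed = rng.uniform(0.2, 0.5)    # fraction absorbed, hypothetical
        weight = rng.uniform(50.0, 90.0)    # kg body weight, hypothetical
        draws.append(amount * absorbed / weight)
    draws.sort()
    return draws

# Deterministic tier: worst-case value of every input at once.
tier1 = deterministic_exposure(200.0, 0.5, 50.0)  # 2.0 mg/kg bw/day

# Probabilistic tier: 95th percentile of the simulated distribution.
draws = probabilistic_exposure(10_000)
p95 = draws[int(0.95 * len(draws)) - 1]

print(f"Tier 1 deterministic estimate: {tier1:.2f} mg/kg bw/day")
print(f"Tier 2+ probabilistic P95:     {p95:.2f} mg/kg bw/day")
```

Because the deterministic tier combines the worst case of every input, it bounds the simulated distribution from above. This is why a tiered scheme can start with the cheaper deterministic estimate and refine it only when that estimate raises a concern.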

152. The higher tiers are used to assess biological activity using gene profiling and/or oestrogen receptor activity.

153. For the UK Roadmap, one of today’s challenges can be addressed by developing relevant new skills in the next generation of scientists. More investment, upscaling and new research will be needed, particularly in the field of exposure assessment, if the UK is to be a world leader in risk-based regulation, in particular NGRA. There needs to be investment in NAM technologies for NGRA (i.e., to characterise relevant hazard potencies). More case studies should be developed and the data collated to see how they are working. Social science will play a role, particularly in risk communication, and will need to be considered. A global open-source data resource should be created. Would there need to be a new agency with a research arm? The UK roadmap is important across government departments, and its value lies in broader perspectives than those of the FSA/COT alone. It should be used to collaborate globally.

154. Finally, a framework should be developed to protect, not predict.

Professor Robert Lee presented on “Regulatory law issues in human health and environment”.

155. Professor Lee outlined the functions of the FSA as set out in the Food Standards Act, including to protect public health from risks; establish advisory committees; develop policy; provide advice, information or assistance to other agencies such as local authorities; and to monitor developments in science, technology and other areas. But the FSA also has objectives, which Professor Lee described as soft law elements rather than hard law, which include providing guidance and the minuted decisions of advisory committees.

156. The objectives are written into strategic programmes. The FSA aims to define and bring on stream programmes of work on data and new technologies. Failing to meet objectives can be subject to judicial review.

157. The FSA also has powers, but in exercising them it has to take into account the nature and magnitude of risks to public health, uncertainty, and the advice of scientific advisory committees, and to consider costs versus benefits.

158. There is little in hard law that stops the development of NAMs. The soft laws could be aligned with NAMs.

159. The advantages of NAMs appear to be that they are faster, cheaper, ethical, mechanistic, and protective to some degree. They look better placed to address data gaps, yet their formal adoption in regulations is limited.

160. Professor Lee moved on to discuss socio-technical barriers. The “N” in NAMs stands for new, so how many scientists in regulatory agencies will have had any involvement in NAMs if they were in higher education more than 20 years ago? In regulatory cultures there is stasis. Errors are costly, which engenders caution. However, if we do not begin to adopt NAMs then the barriers will remain, as there will be no development, validation, and acceptance in regulations.

161. This will require significant building of networks. The roadmap reflects well-known processes. Training is fundamental for the regulatory community, but the culture needs to be considered too. The place for NAMs is within the soft law areas. Professor Lee suggested starting a review of soft law now, to see how well it is aligned with the reception of NAMs as they stand.

Professor Timothy Malloy (University of California Los Angeles) presented on “Advancing alternative testing strategies in regulatory decision making”.

162. Professor Malloy started by discussing the impact of regulatory approaches on the adoption of NAMs, giving the examples of the EPA, ECHA and the UK FSA/COT Roadmap.

163. Informal law and formal law were explained, along with risk context and court interpretation.

164. Risk context is the legal setting, which defines the different purposes for which NAMs may be used. Risk assessment and risk management need to consider whether quantitative risk assessments should be used or a more qualitative approach. Another context is whether to use NAMs, and whether safer design can use alternative assessments for consumer products (Malloy and Beryt, 2016).

165. In most settings, existing formal law is not a barrier to NAMs and is largely agnostic; in some cases it encourages or even mandates their use. TSCA anticipated the use of non-animal testing, built it into statute and assumed that regulators would make great use of NAMs as they were developed. However, this has not seemed to be a great driver elsewhere. A recent study on NAMs in REACH in the EU stated that there is some use of QSARs and in vitro assays, but that they are not used as widely as they could be and in fact are rarely used.

166. Court interpretation concerns instances where agency decisions are challenged in court by industry. Courts are generally quite accepting of NAMs and accept that regulatory agencies must use the best available science; in theory, therefore, courts do not stand in the way of NAMs.

167. In informal law (agency practices), agencies might be expected to push NAMs forward given the freedom they have. However, agencies have not taken advantage of this discretion. Social barriers can be a substantial obstacle to change. Different departments within an agency could be at odds.

168. In 2014 there was a survey on decision making among those involved in chemical risk assessment (Zaunbrecher et al., 2017). Other surveys were also done in Canada, with similar conclusions.

169. The survey discussed screening and prioritisation and the viable use of NAMs. A fairly sizable proportion of respondents (25%) were using NAMs to make risk assessment decisions. But at what level of the risk assessment? E.g., screening/prioritisation? Comparative risk assessment of alternative chemicals? Weight of Evidence (WoE)? Qualitative risk assessment? Dose finding studies or setting of NOAELs? The most important consideration is how viable the use of NAMs is for a given technology and its applied use.

170. What are the barriers to the adoption of NAMs: the validation process, standardisation, social aspects, an inherent disinclination to change? It is not really all about the science (although a lack of funding and inadequate facilities also play a role). It is more about the socio-legal barriers (identified as priority and more common by the survey): resistance to change, a slow validation process, regulatory acceptance, and a lack of standardisation. Less commonly identified barriers were legal challenge of results and of authority (not all agencies are going all in yet, which makes it look risky). However, we may need to consider risk/benefit.

171. The drivers of adoption (identified as priority/more common by survey) are the need for toxicology data by reducing test costs; demand by regulatory agencies; and ethical concerns. Less commonly identified drivers were demand by consumers; industry competition; and demand by NGOs. There needs to be recognition that this is a multidisciplinary undertaking with collaboration, outreach, and planning.

172. There needs to be much more proactive work by regulatory agencies to promote the use of NAMs, which might make their wider acceptance easier, but there could also be a mandate on the use of NAMs. In summary, the law does not stand in the way of NAMs adoption.

Dr Nicole C. Kleinstreuer presented on “Alternative Toxicological Methods and the ICCVAM strategic roadmap”.

173. The NTP Interagency Center for the Evaluation of Alternative Toxicological Methods (NICEATM) is an NTP office focused on the development and evaluation of alternatives to animal use for chemical safety testing. The topics in this section provide information about approaches used to replace, reduce, or refine animal use while ensuring that the toxic potential of the substances is appropriately characterized.

174. The Interagency Coordinating Committee on the Validation of Alternative Methods (ICCVAM) is a permanent committee of the National Institute of Environmental Health Sciences (NIEHS). ICCVAM is composed of representatives from 17 U.S. federal regulatory and research agencies. These regulatory and research agencies require, use, generate, or disseminate toxicological and safety testing information.

175. In January 2018, "A Strategic Roadmap for Establishing New Approaches to Evaluate the Safety of Chemicals and Medical Products in the United States" was published. ICCVAM coordinated the development of the strategic roadmap, a resource to guide the U.S. federal agencies and stakeholders seeking to adopt new approaches to safety and risk assessment of substances. The three pillars are: Utilization, Confidence and Technology.

176. Four Cs underpin the roadmap: communication, commitment, collaboration and confidence.

177. Progress has been achieved since the ICCVAM roadmap was published, with the consensus of all the ICCVAM agencies. Through the roadmap, the work is more efficient and the approach is robust.

178. The Integrated Chemical Environment (ICE) dashboard gives access to data resources, tools and curated data to support chemical safety testing. Tox21 data are curated using analytical chemistry and assay-specific information; in vitro data are mapped to mechanistic targets and regulatory endpoints; in silico predictions are available for physicochemical and ADME properties; and the IVIVE tool uses ICE or user data to estimate in vivo exposure levels (Bell et al., 2020).
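The idea behind in vitro to in vivo extrapolation (IVIVE) can be sketched with a simple reverse-dosimetry calculation. This is a minimal illustration under stated assumptions, not the method implemented in the ICE IVIVE tool: it assumes that steady-state plasma concentration scales linearly with dose, and the function name, parameter names and numerical values are all hypothetical.

```python
def equivalent_administered_dose(activity_conc_uM, css_uM_at_unit_dose):
    """Estimate the daily dose (mg/kg bw/day) that would produce a steady-state
    plasma concentration equal to an in vitro activity concentration.

    activity_conc_uM: in vitro activity concentration (e.g. an AC50), in uM.
    css_uM_at_unit_dose: predicted steady-state plasma concentration, in uM,
        for a dose of 1 mg/kg bw/day (assumed to scale linearly with dose).
    """
    if css_uM_at_unit_dose <= 0:
        raise ValueError("steady-state concentration must be positive")
    return activity_conc_uM / css_uM_at_unit_dose

# Hypothetical values: an assay AC50 of 10 uM and a predicted steady-state
# concentration of 2 uM per 1 mg/kg bw/day of dosing.
ead = equivalent_administered_dose(10.0, 2.0)
print(f"Equivalent administered dose: {ead} mg/kg bw/day")  # 5.0
```

An estimate of this kind can then be compared with an exposure estimate to judge whether bioactivity is likely at realistic exposure levels.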

179. For Tox21 and ToxCast screening data, QC information and flags are used to remove low-confidence values. Biological context is provided to the user.

180. Other tools, such as Curve Surfer, allow a user to view and interact with concentration response curves from cHTS. The PBPK tool generates predictions of tissue-specific chemical concentration profiles following a dosing event.

Session V Roundtable discussion

181. The participants welcomed and praised the organisers of the workshop for presenting a legal perspective to the adoption of NAMs.

182. There was discussion over UK law and whether we have the same formal (hard) and informal (soft) law as in the USA.

183. The UK courts do not reject novel techniques, although they must be accredited or sufficiently sound. The factors to be considered are: “1. Whether the theory or technique can be or has been tested; 2. Whether the theory or technique has been subject to peer review and publication; 3. The known or potential rate of error or the existence of standards; and 4. Whether the theory or technique used has been generally accepted.” (The Crown Prosecution Service, 2019, Prosecution Guidance, Expert Evidence).

184. It might also help to involve the public somehow. There is always the risk that people think that using a different approach from the EU means "watering down" standards. If demand from consumers for NAMs is low, are the ethical concerns driven by scientists?

185. The GM crops issue shows what can happen if public acceptance is missing. Doesn't it depend on how the question is phrased, i.e., "would you prefer to use in vitro tests rather than animals?" as opposed to "what tests would you prefer to be done to ensure that your vaccine/medicine is safe?"

186. There is a need to demonstrate that standards will not be lowered just because something different is being done, and indeed that they should improve through use of the best state-of-the-art science.

187. People are risk averse, so they tend to focus on losses more than gains. Perhaps NAMs need to be promoted in a way that emphasises what can be lost if there is no encouragement for their use and acceptance, rather than the gains.

188. People are sceptical of anything that benefits industry; there appears to be an assumption that industry is not interested in the health of the consumer.

189. Discussions about mRNA vaccines went in the same direction and participants discussed how learnings could be taken from the public reaction to those.

190. There were suggestions that the Scientific Advisory Committees could be bolder in requesting NAMs for mechanistic support and argument on AOPs and MOAs. For example, in regulated products where there are data requirements, data need to be generated by methods that are fit for purpose. There could be benefits if NAMs were to be accepted in this space. Accepted methods help the regulated community as they are pointing to a standard. Would there need to be a standard? Could NAMs be used without having a standard?

191. Generally, courts show deference to regulatory agencies; judges recognise that they are not trained in the roles of the regulatory agencies. The question for the court is whether the agency is following the science and whether that science is fit for purpose in discharging its regulatory duties. However, understandably, people feel more comfortable with accepted methods.